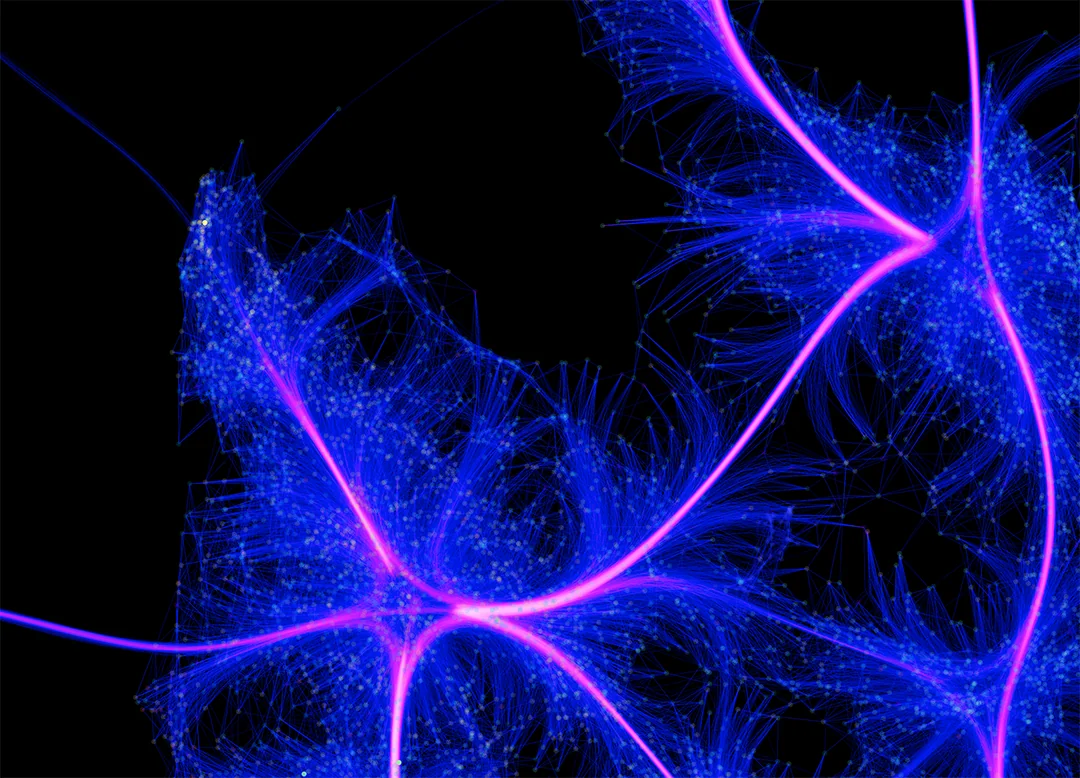

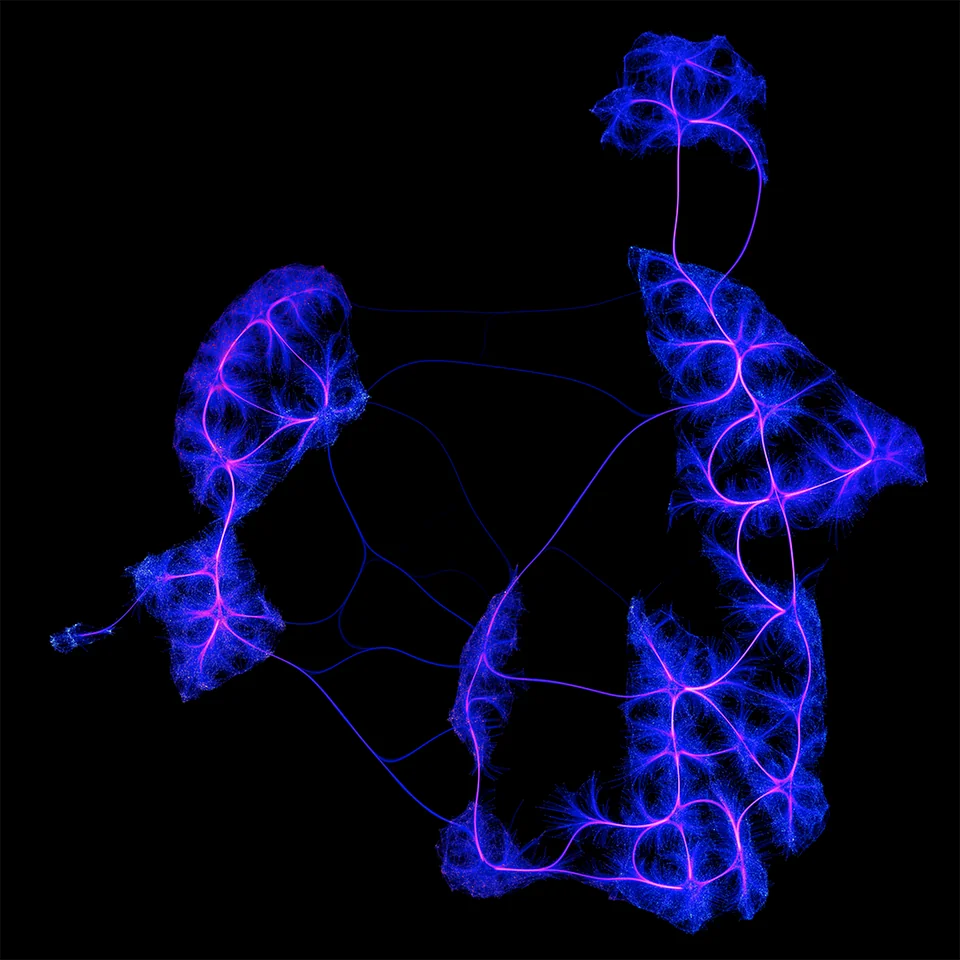

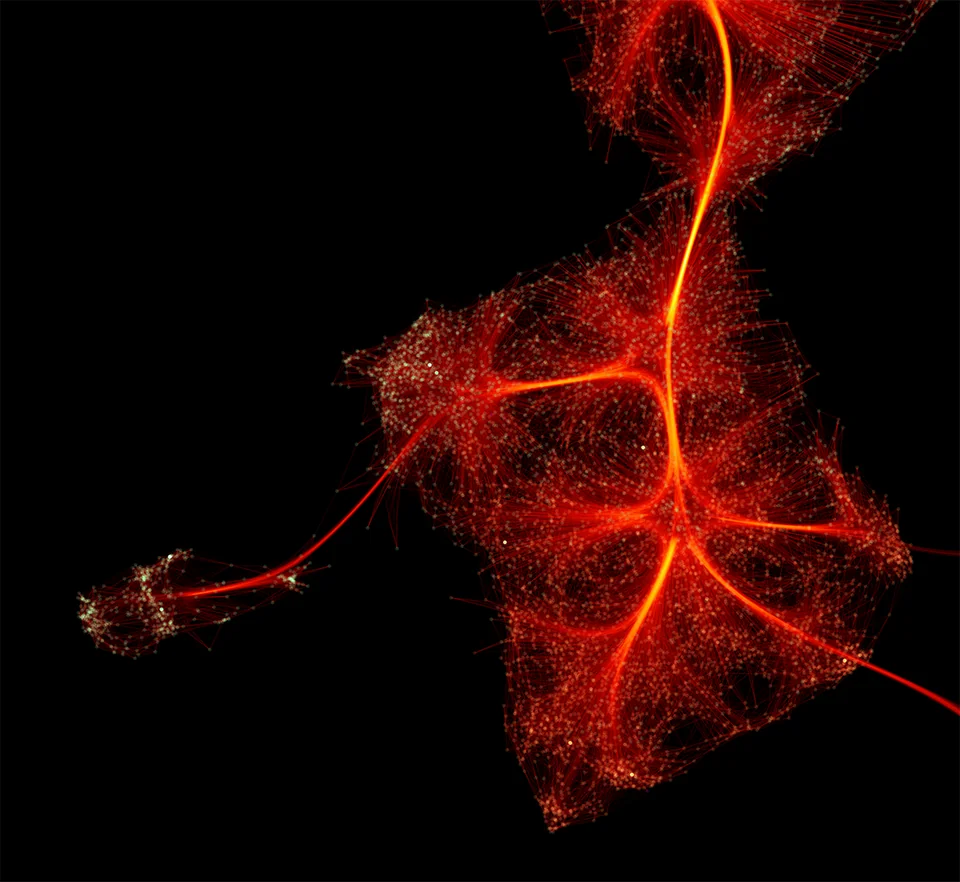

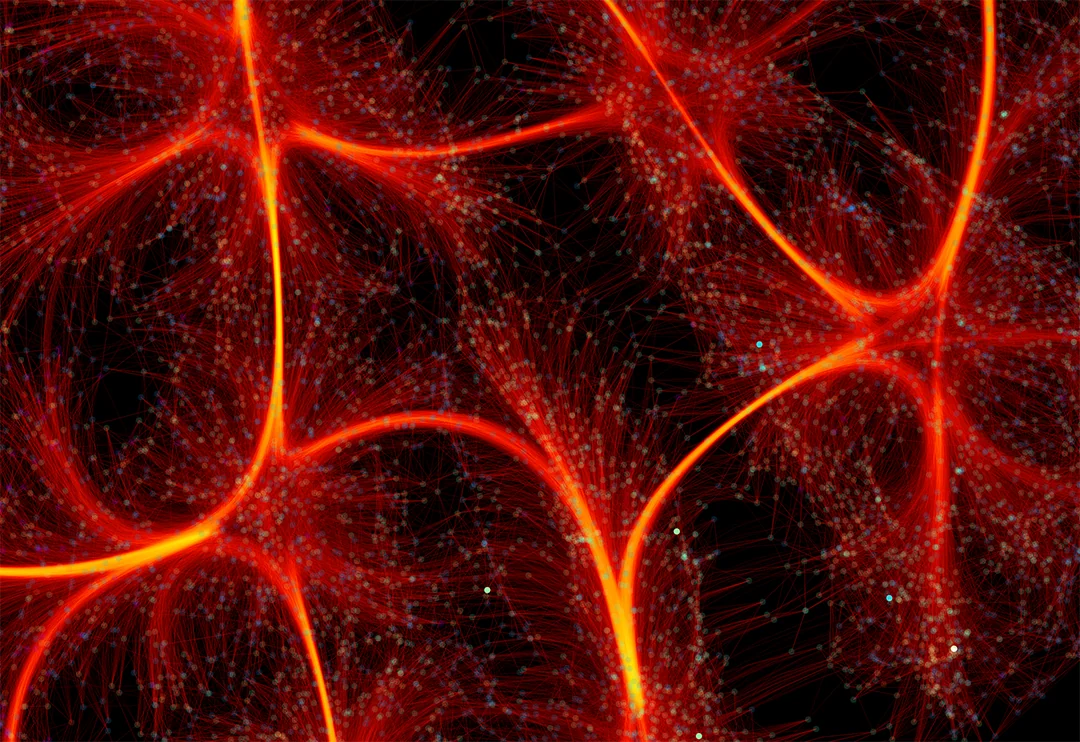

UMAP Network Connections

These graphs show the connections between different images as understood by a deep neural network, specifically a variational autoencoder (VAE). The dataset consists of 60,000 low-resolution images of fashion items (shoes, shirts, bags, etc.). Each tiny dot in a graph corresponds to one image, and the edges represent similarity relationships between images. Images that sit close together in the graph are ones the neural network considers similar.
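As a rough illustration of how edges can encode similarity, the sketch below links each point to its nearest neighbours. It is a deliberately simplified stand-in, not the graph UMAP actually builds (UMAP's edges carry weights), and the `knn_edges` helper and toy points are hypothetical, standing in for the network's learned representations.

```python
import math


def knn_edges(vectors, k):
    """Return undirected edges (i, j) with i < j, linking each vector
    to its k nearest neighbours by Euclidean distance."""
    edges = set()
    for i, v in enumerate(vectors):
        # Distance from point i to every other point.
        dists = [(math.dist(v, w), j) for j, w in enumerate(vectors) if j != i]
        # Keep the k closest and store each edge in a canonical order.
        for _, j in sorted(dists)[:k]:
            edges.add((min(i, j), max(i, j)))
    return edges


# Toy stand-in for image representations: two well-separated clusters.
points = [
    (0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
    (5.0, 5.0), (5.1, 5.0), (5.0, 5.1),
]
edges = knn_edges(points, k=2)
```

With these toy points, every edge stays inside one of the two clusters, which is the same effect that makes similar images bunch together in the plots.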

To generate these images, I trained the convolutional autoencoder on the Fashion-MNIST dataset, used the trained network to extract a 256-dimensional representation of each image in the dataset, and then used Uniform Manifold Approximation and Projection (UMAP) to compress those representations down to 2 dimensions. UMAP internally builds a graph over the data points, and the edges of that graph are what these images show. To generate the visuals, I used a slightly modified version of the connectivity plotting utility (`umap.plot.connectivity`) in the umap-learn Python library. Code is available on GitHub.
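The last two steps might look roughly like the sketch below. It uses the stock `umap.plot.connectivity` function rather than my modified version, and it assumes `features` is an array of shape `(n_images, 256)` already extracted from the trained encoder. The `plot_connectivity` wrapper and its default parameter values are illustrative assumptions, not the settings used for these images.

```python
def plot_connectivity(features, n_neighbors=15, random_state=42):
    """Fit a 2-D UMAP embedding of `features` and draw its neighbour graph.

    `features` is assumed to be an (n_images, 256) array of encoder outputs.
    Returns the Matplotlib axes that the plot was drawn on.
    """
    # Imported lazily: umap.plot needs optional plotting dependencies
    # (installable with `pip install "umap-learn[plot]"`).
    import umap
    import umap.plot

    # Fit UMAP; the fitted object keeps the graph it built internally.
    mapper = umap.UMAP(
        n_components=2,
        n_neighbors=n_neighbors,
        random_state=random_state,
    ).fit(features)

    # Draw the graph edges on top of the 2-D embedding.
    return umap.plot.connectivity(mapper, show_points=True)
```

Calling `ax = plot_connectivity(features)` and then `ax.figure.savefig("connectivity.png")` (a placeholder filename) would write the plot to disk. On a dataset this size, expect the edge rendering to take a while.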

See more in this Reddit post.